9 things I learned working as a Data Scientist in Healthcare

November 08, 2019

Machine learning has shown exciting results in the last few years across a number of fields, ranging from autonomous vehicles to virtual personal assistants. One of the most notable applications is healthcare, where researchers have developed promising AI solutions such as an end-to-end lung cancer screening system and genome-sequencing methods for discovering potential causes of autism.

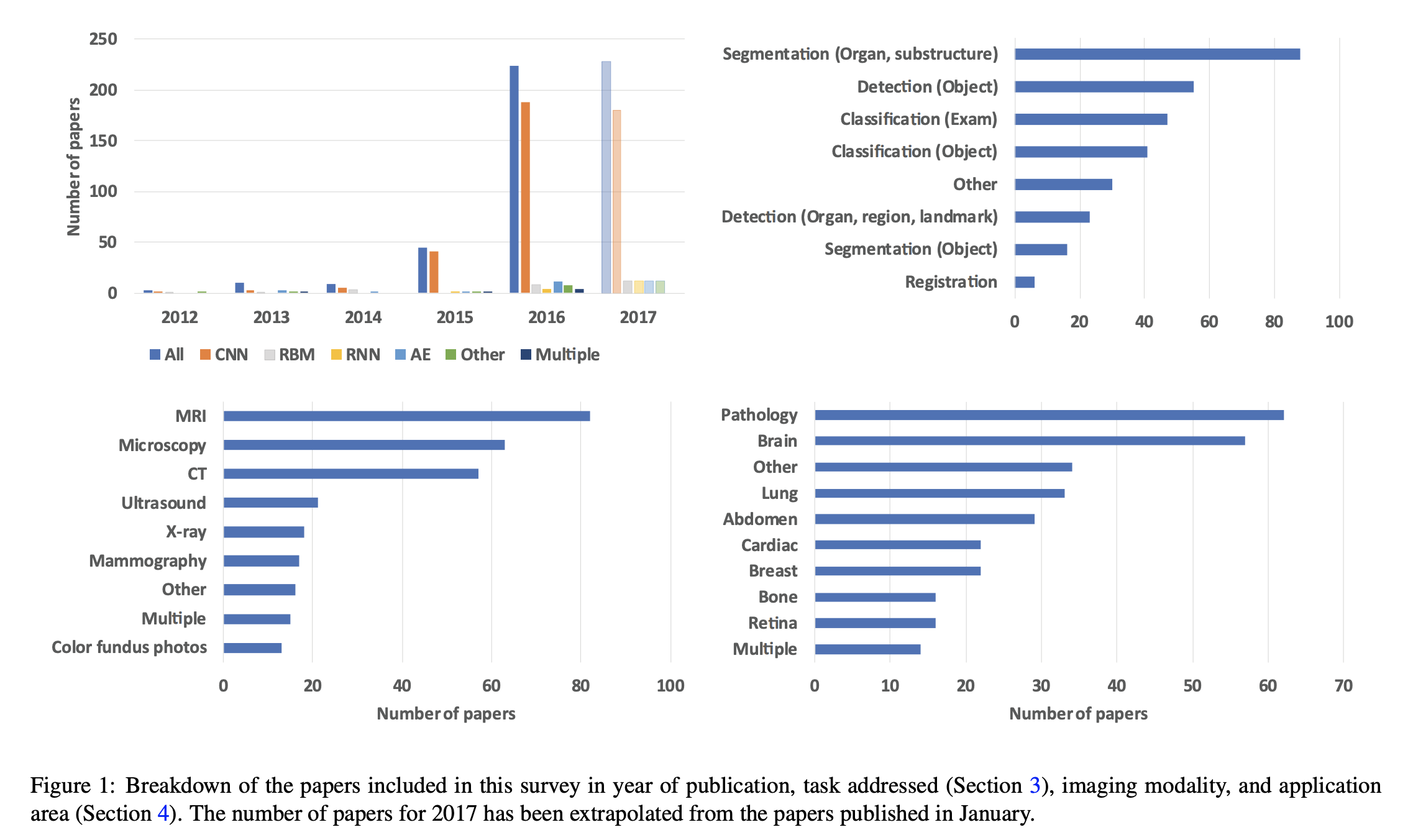

The figure below shows the rise of Convolutional Neural Networks (CNNs) in medical imaging research in recent years and the most popular medical subfields by number of papers published.

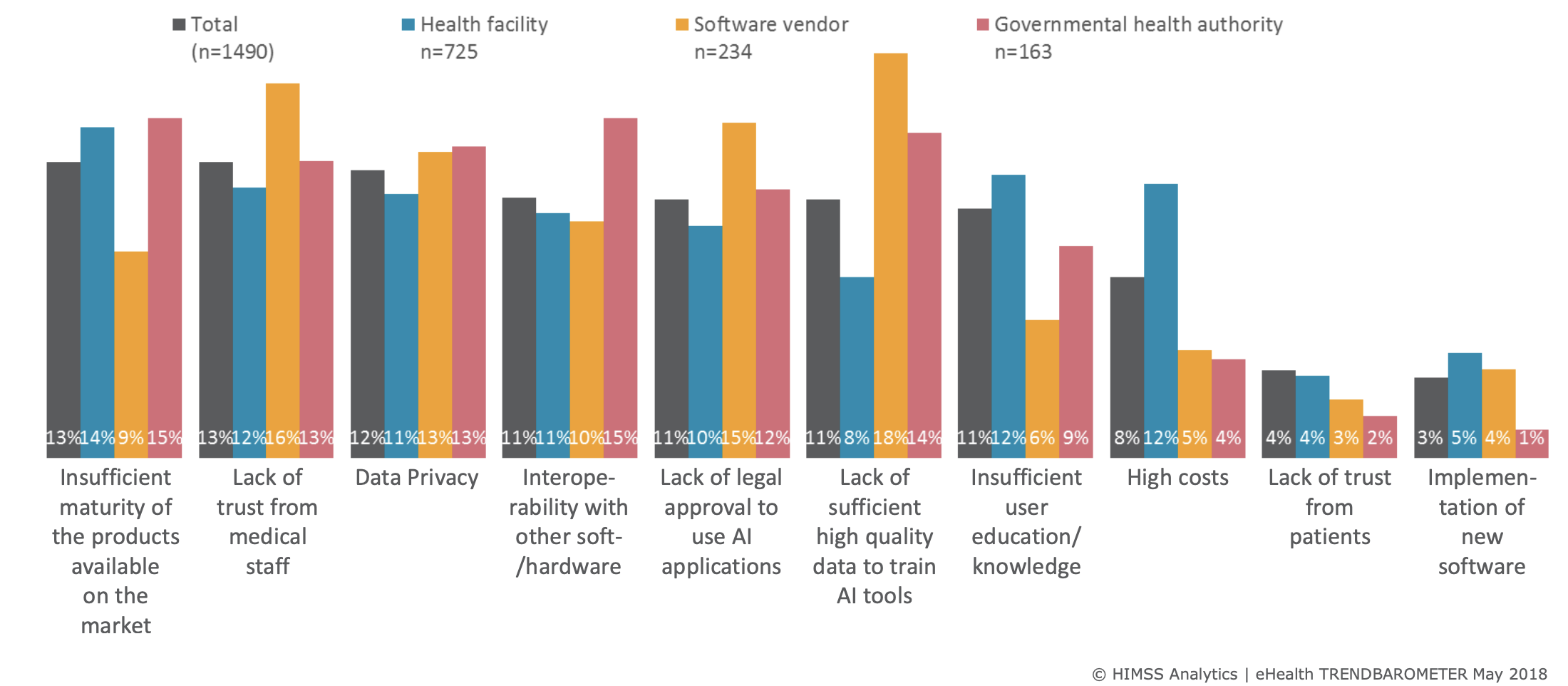

However, there are still some challenges to overcome when it comes to AI adoption in the healthcare industry.

Disclaimer: Most of what follows reflects my experience of working at an AI startup in medical imaging for 2 years, and the views are my own.

1. A need for interpretability and robustness

Despite a lot of buzz and excitement around the area, there is still a lot of skepticism about integrating machine learning systems into clinical practice, and for good reason. Medical practitioners are incredibly busy and cannot allocate the time to learn machine learning or how to interpret its metrics. As a result, there has been an increasing demand for models and software systems that are:

(a) easily interpretable, e.g. through visual indicators of what the models are learning or which regions they consider anomalous;

(b) trustworthy, i.e. backed by robust validation across populations and across various sources/sites.
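
One simple way to produce the kind of visual indicator mentioned in (a) is an occlusion map: hide one patch of the image at a time and record how much the model's score drops. The sketch below is only an illustration under stated assumptions. `toy_score` is a hypothetical stand-in for a trained model, and the image is synthetic.

```python
import numpy as np


def occlusion_map(image, score_fn, patch=4, fill=0.0):
    """Return a heatmap of how much the score drops when each patch is hidden."""
    baseline = score_fn(image)
    height, width = image.shape
    heatmap = np.zeros((height, width), dtype=float)
    for top in range(0, height, patch):
        for left in range(0, width, patch):
            occluded = image.copy()
            occluded[top:top + patch, left:left + patch] = fill
            # A large drop means the score depended on this region.
            heatmap[top:top + patch, left:left + patch] = baseline - score_fn(occluded)
    return heatmap


def toy_score(image):
    """Hypothetical stand-in for a model: mean intensity of a fixed window."""
    return float(image[8:12, 8:12].mean())


image = np.zeros((16, 16))
image[8:12, 8:12] = 1.0  # synthetic bright region standing in for an anomaly
heatmap = occlusion_map(image, toy_score)
```

Overlaying `heatmap` on the original image gives a clinician a rough picture of which regions drove the score. With a real model, the patch size and fill value are choices that would need tuning.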

2. What is the added value of ML systems?

Most of the current value of AI in healthcare lies in giving doctors more time with patients and in letting them prioritise important cases first. These systems are not here to replace doctors, but to help them make quicker and more informed decisions. Other benefits are more personalised care and new discoveries that might not be obvious to the human eye, such as predicting cardiovascular risk from retinal images.

This is still a fairly speculative topic outside of research, since there have been few to no studies showing these benefits in practice. This is mostly due to the long process companies go through to get regulatory approval for their products and to incorporate them effectively into healthcare systems.

3. Importance of patient privacy and encryption

Data is not a big problem in healthcare: there’s a ton of it. An important hurdle, however, is accessing and organising the data in an encrypted, anonymised way. In addition, labelled data holds a lot more value than unlabelled data, and high-quality labels are expensive in terms of both budget and time.
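
As a small illustration of the anonymisation step, the sketch below drops direct identifiers from a record and replaces the patient ID with a keyed hash (HMAC-SHA256 from Python's standard library). The field names, the record and the key are all hypothetical. This is pseudonymisation only; real de-identification of medical data usually involves considerably more than this.

```python
import hashlib
import hmac


def pseudonymise_id(patient_id: str, secret_key: bytes) -> str:
    """Map a patient identifier to a stable token using HMAC-SHA256."""
    digest = hmac.new(secret_key, patient_id.encode("utf-8"), hashlib.sha256)
    return digest.hexdigest()


def strip_direct_identifiers(record: dict, secret_key: bytes) -> dict:
    """Drop direct identifiers and replace the patient ID with a pseudonym."""
    # Hypothetical field names; a real schema will differ.
    direct_identifiers = {"patient_id", "name", "address", "date_of_birth"}
    cleaned = {k: v for k, v in record.items() if k not in direct_identifiers}
    cleaned["pseudo_id"] = pseudonymise_id(record["patient_id"], secret_key)
    return cleaned


# Placeholder key for illustration; a real key must be stored apart from the data.
key = b"example-key-kept-outside-the-dataset"
record = {
    "patient_id": "12345",
    "name": "Jane Doe",
    "date_of_birth": "1970-01-01",
    "finding": "nodule",
}
cleaned = strip_direct_identifiers(record, key)
```

Because the hash is keyed, the same patient maps to the same token across scans, so records can still be linked, while the token cannot be recomputed without the key.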

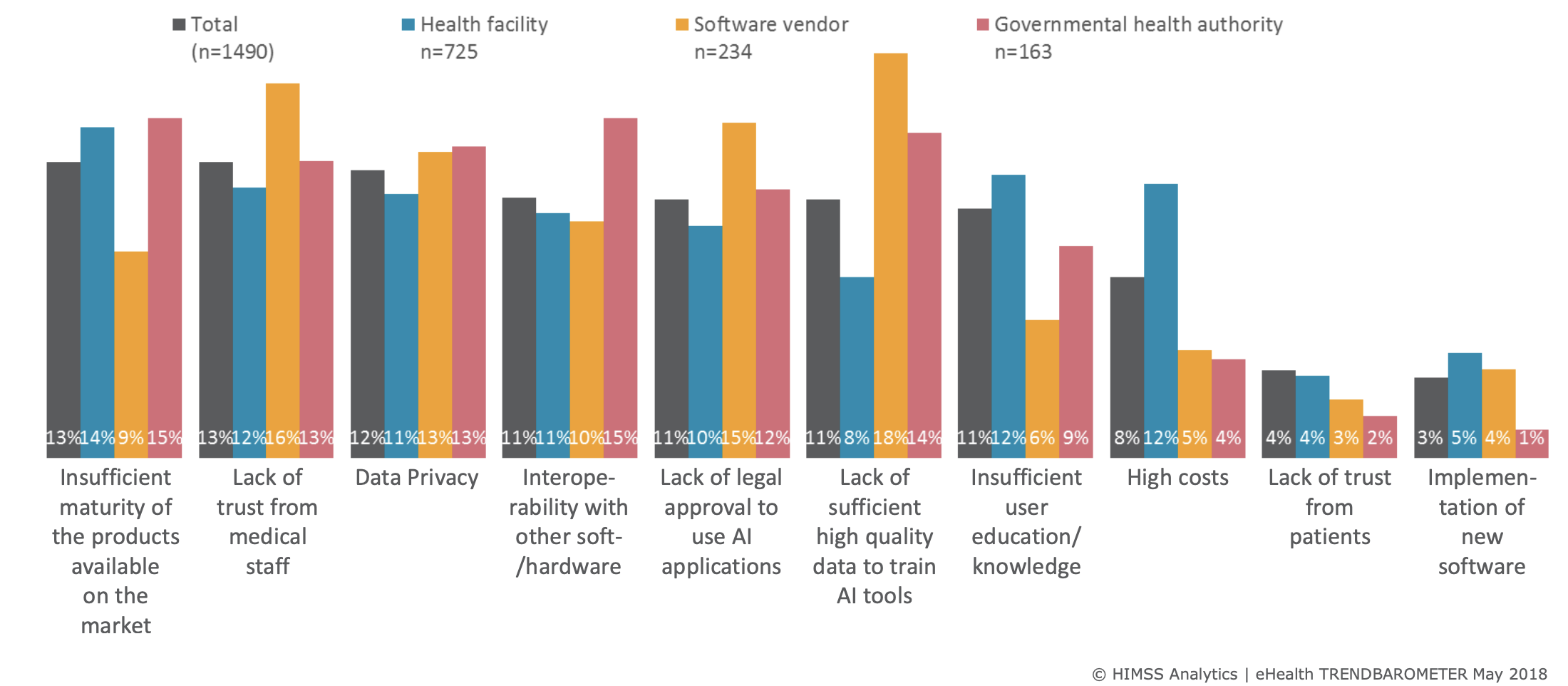

Figure showing some challenges facing AI adoption in Medicine

HIMSS Analytics | eHealth TRENDBAROMETER May 2018

4. Public engagement

There is still some public distrust about how personal data is used, so open and transparent conversations with the public need to happen. A notable example of public scrutiny came in 2017, when a collaboration between Google DeepMind and the Royal Free Hospital was found to have used personal patient data without explicit consent, thus failing to comply with the Data Protection Act.

5. Regulatory approval

Most healthcare practitioners agree that thorough regulation is needed. However, regulatory bodies still need to catch up with the field, and there are no universally accepted approval guidelines yet. This is still an uncertain area, subject to many potential changes. As of May 2019, only 26 companies had received FDA approval for AI-based applications in healthcare, with 14 of those in 2019.

Many deep learning systems are perceived as black boxes, so companies need to strike a balance: presenting comprehensive studies that show their product is robust and safe for the general population, without giving up company IP when disclosing the scientific methods used.

6. Domain knowledge ⇔ Technical Expertise

Machine learning developers and engineers need to work alongside medical practitioners to bridge the domain knowledge gaps both ways. Medical terminology can often be quite obscure, so having a medical expert explain what to look for clinically and how different features are useful for the problem at hand is particularly valuable. Conversely, explaining ML results to clinicians can help them guide you further in improving your algorithm.

Technical experts can develop really impressive models that have little to no clinical utility. Likewise, medical experts cannot be expected to always understand model limitations. More collaboration both ways can only result in better integration of ML systems in clinical practice.

7. Model fairness and biases

Biases in model predictions are usually human biases, whether in the data given, the way of testing, or the problem statement itself. There are many biases and a lot of noise in our own mental representations, which we need to recognise first to ensure we don’t instill them in our models.

One example of such bias is data that is skewed towards a particular subset of the population, usually because it comes from only one source. Little variance in your data means less generalizability and lower accuracy on test examples that look different from those used for training.
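
A quick check for this kind of skew is to break a metric down by data source rather than reporting a single average. The sketch below is a minimal illustration with made-up labels, predictions and site names. `accuracy_by_group` is a hypothetical helper, not part of any library.

```python
from collections import defaultdict


def accuracy_by_group(labels, predictions, groups):
    """Return {group: accuracy} so weak subgroups are not hidden by the average."""
    correct = defaultdict(int)
    total = defaultdict(int)
    for label, prediction, group in zip(labels, predictions, groups):
        total[group] += 1
        correct[group] += int(label == prediction)
    return {group: correct[group] / total[group] for group in total}


# Made-up data: the model does well on site A and poorly on site B.
labels = [1, 0, 1, 1, 0, 1, 0, 0]
predictions = [1, 0, 1, 1, 0, 0, 1, 0]
sites = ["A", "A", "A", "A", "A", "B", "B", "B"]

per_site = accuracy_by_group(labels, predictions, sites)
overall = sum(int(l == p) for l, p in zip(labels, predictions)) / len(labels)
```

On this made-up data the overall accuracy is 0.75, which looks acceptable, while site B on its own scores about 0.33. The same breakdown can be applied to age bands, scanner types, or any other grouping available in the metadata.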

8. More research required in unsupervised learning

On the machine learning research side, more attention is being given to developing unsupervised or few-shot learning models that don’t require costly human labels. There are some promising studies that show how representation learning can be leveraged to minimise the number of labels, such as unsupervised segmentation of 3D CT medical images shown by Moriya et al. and the work done by Hussein et al. in unsupervised lung and pancreatic tumour classification.

9. An attitude shift from doctors towards embracing Machine Learning in Healthcare

Despite these challenges, an exciting shift is happening in healthcare, and more and more medical practitioners are starting to embrace AI. This is largely due to a growing body of clinical studies that compare the performance of clinical experts and machine learning models and show comparable results. More studies are pending that will show the real clinical usefulness of such systems.

Further resources:

- Do no harm: a roadmap for responsible machine learning for health care, Nature Article

- Eric Topol’s Deep medicine: how artificial intelligence can make healthcare human again. Amazon

- Luke Oakden Rayner’s blog

- Artificial intelligence, bias and clinical safety BMJ Journals

- If AI is going to be the world’s doctor, it needs better textbooks Quartz Prescription AI Series

- Artificial intelligence in healthcare: past, present and future BMJ Journals

- High-performance medicine: the convergence of human and artificial intelligence Nature Article

I mostly write about AI and machine learning.

Despite a lot of buzz and excitement around the area, there is still a lot of skepticism when it comes to integrating machine learning systems in clinical practice and for good reason. Medical practitioners are incredibly busy and cannot allocate the time to learn machine learning or how to interpret and understand the metrics, therefore there has been an increasing demand for models and software systems that are:

(a) easily interpretable e.g. visual indicators of what the models are learning or what regions they consider anomalous;

(b) trustworthy i.e. backed by robust validation across populations and from various sources/sites.

2. What is the added value of ML systems?

Most of the current value in AI in healthcare is increased patient time for doctors and being able to prioritise important cases first. These systems are not here to replace doctors, but instead to help them make more informed and quicker decisions. Other benefits are more personalised care, and new discoveries, that might not be obvious to the human eye, such as predicting cardiovascular risk from retinal images.

This is still a fairly speculative topic outside of research, since there have been little to no studies to show these benefits in practice. This is mostly due to the long process it takes for companies to get regulatory approval for their products and incorporate it effectively in healthcare systems.

3. Importance of patient privacy and encryption

Data is not a big problem in healthcare, there’s a ton of it. An important hurdle, however, is accessing and organising the data in an encrypted, anonymised way. In addition, labelled data holds a lot more value than unlabelled, and high quality labels are expensive both in terms of budget and time.

Figure showing some challenges facing AI adoption in Medicine

HIMSS Analytics | eHealth TRENDBAROMETER May 2018

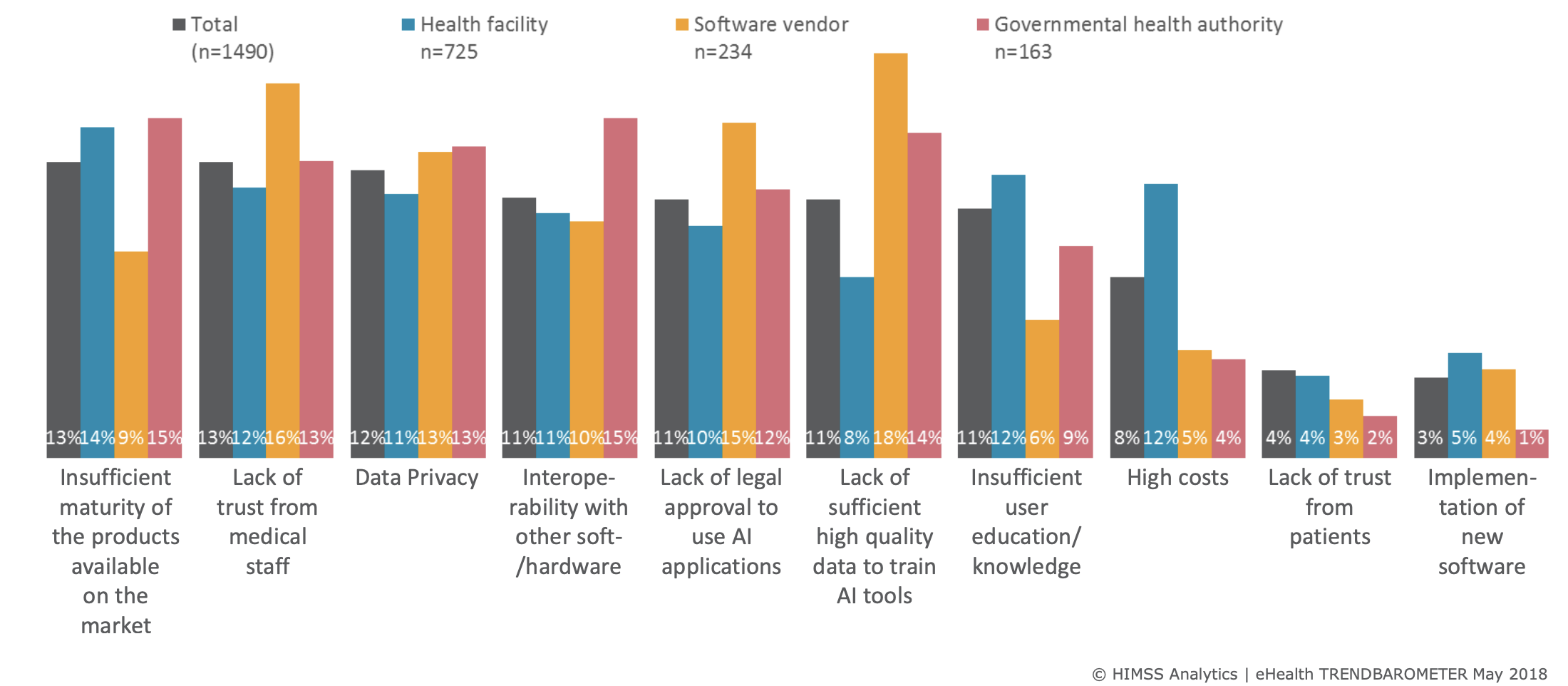

Figure showing some challenges facing AI adoption in Medicine

HIMSS Analytics | eHealth TRENDBAROMETER May 2018

4. Public engagement

There’s still some distrust from the public on how their personal data is used, hence, open and transparent conversations need to happen with the public. A notable example of public scrutiny happened in 2017 when Google DeepMind in collaboration with Royal Free Hospital used personal patient data without explicit consent, thus failing to comply with the Data Protection Act.

5. Regulatory approval

Most healthcare practitioners agree thorough regulation is needed. However, regulatory bodies still need to catch up with the field and there are no universally accepted approval guidelines yet. This is still an uncertain area, subject to many potential changes. As of May 2019, only 26 companies have received FDA approval for AI-based application in healthcare with 14 of those in 2019.

A lot of deep learning systems are perceived as black boxes, thus companies need to balance showing comprehensive studies proving that their product is robust and safe for the general population without giving up company IP when showing the scientific methods used.

6. Domain knowledge ⇔ Technical Expertise

Machine learning developers and engineers need to work alongside medical practitioners to bridge the domain knowledge gaps both ways. Medical terminology can often be quite obscure, thus having a medical expert explaining to you what to look for clinically and how different features are useful for the problem at hand is particularly valuable. Reciprocally, explaining ML results to clinicians can help them guide you further in improving your algorithm.

Technical experts can develop really impressive models that have little to no clinical utility. Likewise, medical experts cannot be expected to always understand model limitations. More collaboration both ways can only result in better integration of ML systems in clinical practice.

7. Model fairness and biases

Biases in model predictions are usually human biases. Either in the data given, the way of testing, or the problem statement itself. There’s a lot of biases & noise in our own mental representations we need to realise first to ensure we don’t instill them in our models.

An example of such biases show how data can be skewed towards a particular subset of the population, usually coming from only one source. Little variance in your data means less generalizability and lower accuracy results in test examples that look different than what was used for training.

8. More research required in unsupervised learning

On the machine learning research side, more attention is given to developing unsupervised or few shot learning models that don’t require costly human labels. There are some promising studies that show how representation learning can be leveraged to minimise the number of labels, such as unsupervised segmentation of 3D CT medical images shown by Moriya et al. and the work done by Hussein et al. in unsupervised lung and pancreatic tumour classification.

9. An attitude shift from doctors towards embracing Machine Learning in Healthcare

Despite these challenges, an exciting shift is happening in healthcare and more and more medical practitioners are starting to embrace AI. This is largely due to a greater body of clinical studies that compare the performances between clinical experts and machine learning models and show comparable results. More studies are pending that will show the real clinical usefulness of such systems.

Further resources:

- Do no harm: a roadmap for responsible machine learning for health care, Nature Article

- Eric Topol’s Deep medicine: how artificial intelligence can make healthcare human again. Amazon

- Luke Oakden Rayner’s blog

- Artificial intelligence, bias and clinical safety

BMJ Journals

- If AI is going to be the world’s doctor, it needs better textbooks Quartz Prescription AI Series

- Artificial intelligence in healthcare: past, present and future BMJ Journals

- High-performance medicine: the convergence of human and artificial intelligence Nature Article

I mostly write about AI and machine learning.

Most of the current value in AI in healthcare is increased patient time for doctors and being able to prioritise important cases first. These systems are not here to replace doctors, but instead to help them make more informed and quicker decisions. Other benefits are more personalised care, and new discoveries, that might not be obvious to the human eye, such as predicting cardiovascular risk from retinal images.

This is still a fairly speculative topic outside of research, since there have been little to no studies to show these benefits in practice. This is mostly due to the long process it takes for companies to get regulatory approval for their products and incorporate it effectively in healthcare systems.

3. Importance of patient privacy and encryption

Data is not a big problem in healthcare, there’s a ton of it. An important hurdle, however, is accessing and organising the data in an encrypted, anonymised way. In addition, labelled data holds a lot more value than unlabelled, and high quality labels are expensive both in terms of budget and time.

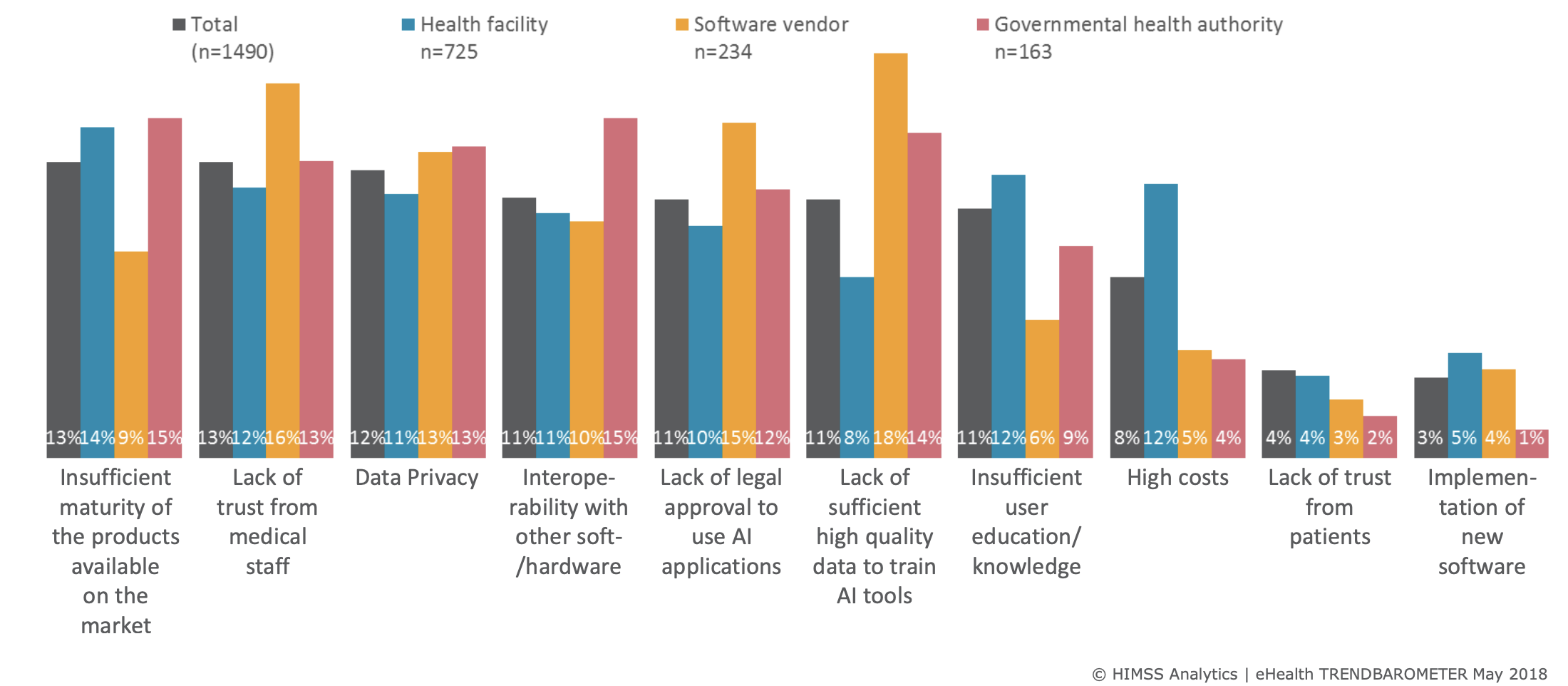

Figure showing some challenges facing AI adoption in Medicine

HIMSS Analytics | eHealth TRENDBAROMETER May 2018

Figure showing some challenges facing AI adoption in Medicine

HIMSS Analytics | eHealth TRENDBAROMETER May 2018

4. Public engagement

There’s still some distrust from the public on how their personal data is used, hence, open and transparent conversations need to happen with the public. A notable example of public scrutiny happened in 2017 when Google DeepMind in collaboration with Royal Free Hospital used personal patient data without explicit consent, thus failing to comply with the Data Protection Act.

5. Regulatory approval

Most healthcare practitioners agree thorough regulation is needed. However, regulatory bodies still need to catch up with the field and there are no universally accepted approval guidelines yet. This is still an uncertain area, subject to many potential changes. As of May 2019, only 26 companies have received FDA approval for AI-based application in healthcare with 14 of those in 2019.

A lot of deep learning systems are perceived as black boxes, thus companies need to balance showing comprehensive studies proving that their product is robust and safe for the general population without giving up company IP when showing the scientific methods used.

6. Domain knowledge ⇔ Technical Expertise

Machine learning developers and engineers need to work alongside medical practitioners to bridge the domain knowledge gaps both ways. Medical terminology can often be quite obscure, thus having a medical expert explaining to you what to look for clinically and how different features are useful for the problem at hand is particularly valuable. Reciprocally, explaining ML results to clinicians can help them guide you further in improving your algorithm.

Technical experts can develop really impressive models that have little to no clinical utility. Likewise, medical experts cannot be expected to always understand model limitations. More collaboration both ways can only result in better integration of ML systems in clinical practice.

7. Model fairness and biases

Biases in model predictions are usually human biases. Either in the data given, the way of testing, or the problem statement itself. There’s a lot of biases & noise in our own mental representations we need to realise first to ensure we don’t instill them in our models.

An example of such biases show how data can be skewed towards a particular subset of the population, usually coming from only one source. Little variance in your data means less generalizability and lower accuracy results in test examples that look different than what was used for training.

8. More research required in unsupervised learning

On the machine learning research side, more attention is given to developing unsupervised or few shot learning models that don’t require costly human labels. There are some promising studies that show how representation learning can be leveraged to minimise the number of labels, such as unsupervised segmentation of 3D CT medical images shown by Moriya et al. and the work done by Hussein et al. in unsupervised lung and pancreatic tumour classification.

9. An attitude shift from doctors towards embracing Machine Learning in Healthcare

Despite these challenges, an exciting shift is happening in healthcare and more and more medical practitioners are starting to embrace AI. This is largely due to a greater body of clinical studies that compare the performances between clinical experts and machine learning models and show comparable results. More studies are pending that will show the real clinical usefulness of such systems.

Further resources:

- Do no harm: a roadmap for responsible machine learning for health care, Nature Article

- Eric Topol’s Deep medicine: how artificial intelligence can make healthcare human again. Amazon

- Luke Oakden Rayner’s blog

- Artificial intelligence, bias and clinical safety

BMJ Journals

- If AI is going to be the world’s doctor, it needs better textbooks Quartz Prescription AI Series

- Artificial intelligence in healthcare: past, present and future BMJ Journals

- High-performance medicine: the convergence of human and artificial intelligence Nature Article

I mostly write about AI and machine learning.

Data is not a big problem in healthcare, there’s a ton of it. An important hurdle, however, is accessing and organising the data in an encrypted, anonymised way. In addition, labelled data holds a lot more value than unlabelled, and high quality labels are expensive both in terms of budget and time.

Figure showing some challenges facing AI adoption in Medicine

HIMSS Analytics | eHealth TRENDBAROMETER May 2018

Figure showing some challenges facing AI adoption in Medicine

HIMSS Analytics | eHealth TRENDBAROMETER May 2018

4. Public engagement

There’s still some distrust from the public on how their personal data is used, hence, open and transparent conversations need to happen with the public. A notable example of public scrutiny happened in 2017 when Google DeepMind in collaboration with Royal Free Hospital used personal patient data without explicit consent, thus failing to comply with the Data Protection Act.

5. Regulatory approval

Most healthcare practitioners agree thorough regulation is needed. However, regulatory bodies still need to catch up with the field and there are no universally accepted approval guidelines yet. This is still an uncertain area, subject to many potential changes. As of May 2019, only 26 companies have received FDA approval for AI-based application in healthcare with 14 of those in 2019.

A lot of deep learning systems are perceived as black boxes, thus companies need to balance showing comprehensive studies proving that their product is robust and safe for the general population without giving up company IP when showing the scientific methods used.

6. Domain knowledge ⇔ Technical Expertise

Machine learning developers and engineers need to work alongside medical practitioners to bridge the domain knowledge gaps both ways. Medical terminology can often be quite obscure, thus having a medical expert explaining to you what to look for clinically and how different features are useful for the problem at hand is particularly valuable. Reciprocally, explaining ML results to clinicians can help them guide you further in improving your algorithm.

Technical experts can develop really impressive models that have little to no clinical utility. Likewise, medical experts cannot be expected to always understand model limitations. More collaboration both ways can only result in better integration of ML systems in clinical practice.

7. Model fairness and biases

Biases in model predictions are usually human biases. Either in the data given, the way of testing, or the problem statement itself. There’s a lot of biases & noise in our own mental representations we need to realise first to ensure we don’t instill them in our models.

An example of such biases show how data can be skewed towards a particular subset of the population, usually coming from only one source. Little variance in your data means less generalizability and lower accuracy results in test examples that look different than what was used for training.

8. More research required in unsupervised learning

On the machine learning research side, more attention is given to developing unsupervised or few shot learning models that don’t require costly human labels. There are some promising studies that show how representation learning can be leveraged to minimise the number of labels, such as unsupervised segmentation of 3D CT medical images shown by Moriya et al. and the work done by Hussein et al. in unsupervised lung and pancreatic tumour classification.

9. An attitude shift from doctors towards embracing Machine Learning in Healthcare

Despite these challenges, an exciting shift is happening in healthcare and more and more medical practitioners are starting to embrace AI. This is largely due to a greater body of clinical studies that compare the performances between clinical experts and machine learning models and show comparable results. More studies are pending that will show the real clinical usefulness of such systems.

Further resources:

- Do no harm: a roadmap for responsible machine learning for health care, Nature Article

- Eric Topol’s Deep medicine: how artificial intelligence can make healthcare human again. Amazon

- Luke Oakden Rayner’s blog

- Artificial intelligence, bias and clinical safety

BMJ Journals

- If AI is going to be the world’s doctor, it needs better textbooks Quartz Prescription AI Series

- Artificial intelligence in healthcare: past, present and future BMJ Journals

- High-performance medicine: the convergence of human and artificial intelligence Nature Article

I mostly write about AI and machine learning.

There’s still some distrust from the public on how their personal data is used, hence, open and transparent conversations need to happen with the public. A notable example of public scrutiny happened in 2017 when Google DeepMind in collaboration with Royal Free Hospital used personal patient data without explicit consent, thus failing to comply with the Data Protection Act.

5. Regulatory approval

Most healthcare practitioners agree thorough regulation is needed. However, regulatory bodies still need to catch up with the field and there are no universally accepted approval guidelines yet. This is still an uncertain area, subject to many potential changes. As of May 2019, only 26 companies have received FDA approval for AI-based application in healthcare with 14 of those in 2019.

A lot of deep learning systems are perceived as black boxes, thus companies need to balance showing comprehensive studies proving that their product is robust and safe for the general population without giving up company IP when showing the scientific methods used.

6. Domain knowledge ⇔ Technical Expertise

Machine learning developers and engineers need to work alongside medical practitioners to bridge the domain knowledge gaps both ways. Medical terminology can often be quite obscure, thus having a medical expert explaining to you what to look for clinically and how different features are useful for the problem at hand is particularly valuable. Reciprocally, explaining ML results to clinicians can help them guide you further in improving your algorithm.

Technical experts can develop really impressive models that have little to no clinical utility. Likewise, medical experts cannot be expected to always understand model limitations. More collaboration both ways can only result in better integration of ML systems in clinical practice.

7. Model fairness and biases

Biases in model predictions are usually human biases. Either in the data given, the way of testing, or the problem statement itself. There’s a lot of biases & noise in our own mental representations we need to realise first to ensure we don’t instill them in our models.

An example of such biases show how data can be skewed towards a particular subset of the population, usually coming from only one source. Little variance in your data means less generalizability and lower accuracy results in test examples that look different than what was used for training.

8. More research required in unsupervised learning

On the machine learning research side, more attention is given to developing unsupervised or few shot learning models that don’t require costly human labels. There are some promising studies that show how representation learning can be leveraged to minimise the number of labels, such as unsupervised segmentation of 3D CT medical images shown by Moriya et al. and the work done by Hussein et al. in unsupervised lung and pancreatic tumour classification.

9. An attitude shift from doctors towards embracing Machine Learning in Healthcare

Despite these challenges, an exciting shift is happening in healthcare and more and more medical practitioners are starting to embrace AI. This is largely due to a greater body of clinical studies that compare the performances between clinical experts and machine learning models and show comparable results. More studies are pending that will show the real clinical usefulness of such systems.

Further resources:

- Do no harm: a roadmap for responsible machine learning for health care, Nature Article

- Eric Topol’s Deep medicine: how artificial intelligence can make healthcare human again. Amazon

- Luke Oakden Rayner’s blog

- Artificial intelligence, bias and clinical safety

BMJ Journals

- If AI is going to be the world’s doctor, it needs better textbooks Quartz Prescription AI Series

- Artificial intelligence in healthcare: past, present and future BMJ Journals

- High-performance medicine: the convergence of human and artificial intelligence Nature Article

I mostly write about AI and machine learning.

Most healthcare practitioners agree thorough regulation is needed. However, regulatory bodies still need to catch up with the field and there are no universally accepted approval guidelines yet. This is still an uncertain area, subject to many potential changes. As of May 2019, only 26 companies have received FDA approval for AI-based application in healthcare with 14 of those in 2019.

A lot of deep learning systems are perceived as black boxes, thus companies need to balance showing comprehensive studies proving that their product is robust and safe for the general population without giving up company IP when showing the scientific methods used.

6. Domain knowledge ⇔ Technical Expertise

Machine learning developers and engineers need to work alongside medical practitioners to bridge the domain knowledge gaps both ways. Medical terminology can often be quite obscure, thus having a medical expert explaining to you what to look for clinically and how different features are useful for the problem at hand is particularly valuable. Reciprocally, explaining ML results to clinicians can help them guide you further in improving your algorithm.

Technical experts can develop really impressive models that have little to no clinical utility. Likewise, medical experts cannot be expected to always understand model limitations. More collaboration both ways can only result in better integration of ML systems in clinical practice.

7. Model fairness and biases

Biases in model predictions are usually human biases. Either in the data given, the way of testing, or the problem statement itself. There’s a lot of biases & noise in our own mental representations we need to realise first to ensure we don’t instill them in our models.

An example of such biases show how data can be skewed towards a particular subset of the population, usually coming from only one source. Little variance in your data means less generalizability and lower accuracy results in test examples that look different than what was used for training.

8. More research required in unsupervised learning

On the machine learning research side, more attention is given to developing unsupervised or few shot learning models that don’t require costly human labels. There are some promising studies that show how representation learning can be leveraged to minimise the number of labels, such as unsupervised segmentation of 3D CT medical images shown by Moriya et al. and the work done by Hussein et al. in unsupervised lung and pancreatic tumour classification.

9. An attitude shift from doctors towards embracing Machine Learning in Healthcare

Despite these challenges, an exciting shift is happening in healthcare and more and more medical practitioners are starting to embrace AI. This is largely due to a greater body of clinical studies that compare the performances between clinical experts and machine learning models and show comparable results. More studies are pending that will show the real clinical usefulness of such systems.

Further resources:

- Do no harm: a roadmap for responsible machine learning for health care, Nature Article

- Eric Topol’s Deep medicine: how artificial intelligence can make healthcare human again. Amazon

- Luke Oakden Rayner’s blog

- Artificial intelligence, bias and clinical safety

BMJ Journals

- If AI is going to be the world’s doctor, it needs better textbooks Quartz Prescription AI Series

- Artificial intelligence in healthcare: past, present and future BMJ Journals

- High-performance medicine: the convergence of human and artificial intelligence Nature Article

I mostly write about AI and machine learning.